As someone who has spent most of my career designing and building websites, I tend to think of interaction design within the realm of explicit interactions.

In an explicit interaction, the user is paying attention to an interface on a screen and will generally initiate any actions or processes in the program, whether through free will, habit, or because the program has prompted them to act.

That means I expect users to interact directly with a Graphical User Interface (GUI), using a mouse and keyboard or perhaps by manipulating content or controls on the screen with a finger.

We do think about accessibility, but only in a limited sense, in terms of constraints. The emotional and sociological aspects of designing services are usually ignored, or squeezed into a very narrow paradigm.

The Internet of Things

As we embed internet connectivity and computing power into everyday physical objects and devices, we build the Internet of Things (IOT). Each of these objects has an IP address and can potentially talk to the others through APIs.

Combine this with the falling cost of sensors, feedback technology, gesture and voice control, and machine learning, and we have an almost limitless playground of interconnected devices and services that can operate in the background without our intervention and interface with us when necessary.

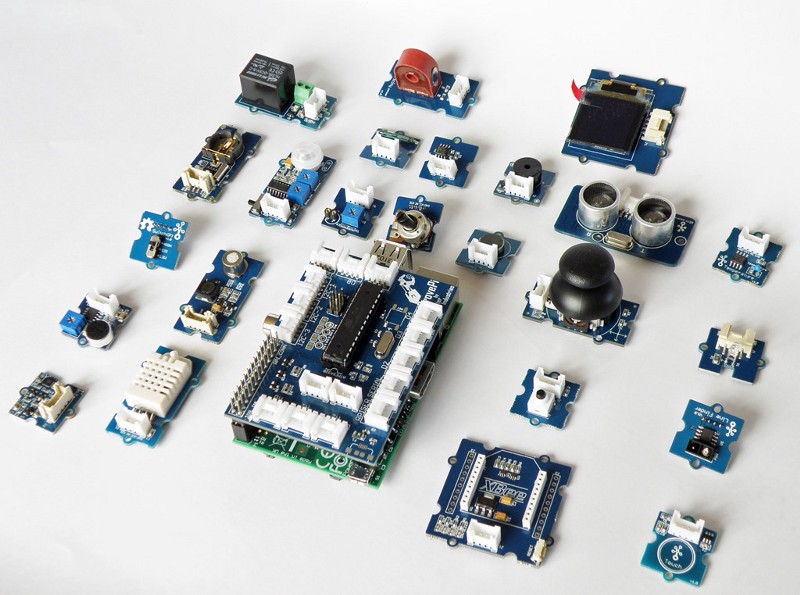

Sensors

We now have affordable sensors for capturing all kinds of data about our environment: movement, location, audio, speech, touch, biometrics, temperature, light levels, and the list goes on. These can be embedded in our environment, where they can unobtrusively monitor us and our surroundings.

Feedback

We can also give feedback in forms other than on-screen visuals: haptic, audio, ambient, and more.

Gestures

We now have a wide variety of sensors that can capture more natural gestures for interacting with technology. Examples include Microsoft Kinect, Leap Motion and Google’s Soli project.

Voice Recognition

Voice recognition has become remarkably accurate thanks to machine learning; Microsoft researchers claim that it is approaching parity with human speech recognition. We can now give voice commands and ask questions, and intelligent agents will be able to respond.

Machine Learning

With more powerful, often cloud-based, computing, our software and the devices it runs on can learn to recognise and act on patterns of behaviour and data. One example is the Nest thermostat, which gradually learns your patterns and reduces the need for you to interact with an interface.
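To make the idea of "learning your patterns" a little more concrete, here is a minimal sketch of a thermostat that learns a preferred temperature for each hour of the day from manual adjustments. It is purely illustrative and is not how the Nest thermostat actually works; the class name `LearningThermostat`, the default of 20 °C and the learning rate of 0.5 are all hypothetical choices. Each explicit adjustment nudges the learned value for that hour towards the user's choice (an exponential moving average), so over time the implicit behaviour takes over and the user needs to intervene less.

```python
from typing import Dict


class LearningThermostat:
    """Hypothetical thermostat that learns a setpoint for each hour of the day.

    Illustration only: an exponential moving average of manual adjustments,
    not the algorithm used by any real product.
    """

    def __init__(self, default_c: float = 20.0, learning_rate: float = 0.5) -> None:
        self.default_c = default_c            # assumed starting setpoint in °C
        self.learning_rate = learning_rate    # 0..1; higher adapts faster
        self.schedule: Dict[int, float] = {}  # hour (0-23) -> learned setpoint

    def user_adjusts(self, hour: int, target_c: float) -> None:
        """Explicit interaction: move the learned setpoint towards the user's choice."""
        current = self.schedule.get(hour, self.default_c)
        self.schedule[hour] = current + self.learning_rate * (target_c - current)

    def setpoint(self, hour: int) -> float:
        """Implicit interaction: the temperature applied without the user asking."""
        return self.schedule.get(hour, self.default_c)


if __name__ == "__main__":
    thermostat = LearningThermostat()
    thermostat.user_adjusts(7, 22.0)  # the user turns the heat up one morning
    thermostat.user_adjusts(7, 22.0)  # and again the next morning
    print(thermostat.setpoint(7), thermostat.setpoint(12))
```

After two identical morning adjustments the learned 7:00 setpoint has moved from 20.0 to 21.5, while untouched hours stay at the default. A real learning system would weigh far more signals (occupancy, weather, weekdays), but the principle of explicit input gradually becoming implicit behaviour is the same.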

Implicit interactions

Much has been written about the coming zombie apocalypse of smart devices, and in future IOT scenarios we cannot expect to explicitly interact with, or even notice, every app or device.

Many of these interactions are likely to be displaced in location or time, so they will not be direct.

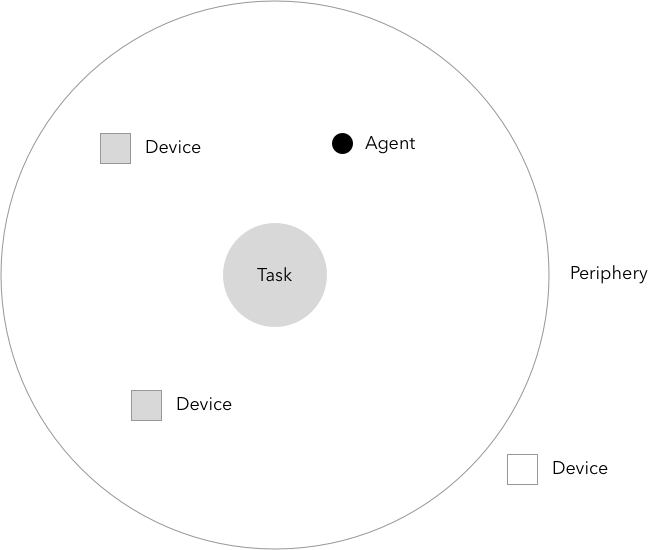

The interactions will also need to be aware of context, which is where things become really interesting and valuable. What devices are talking to each other? What is happening in the periphery of the user? Which agent will act to make a change in the system?

This is where implicit interactions come in. Some of our interactions can move into the background and the periphery, where smart automation and invisible processes do the work without burdening the user.

We can also affect systems without directly manipulating them through a GUI, for example through gestures, presence or voice control.

Using the four quadrants of the diagram, a simple example could be an IOT intelligent light switch. When a person enters the room, the lights turn on (bottom right). If the person wants dimmer lights, they explicitly override the system and turn the light down with a switch or a gesture (top left). When they are away, they might want the lights to turn on at night anyway to discourage burglars (bottom left). Finally, the system could send a notification when the light bulbs are close to needing replacement (top right).
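The light switch example can be sketched as a small state machine that mixes one explicit interaction with several implicit ones. This is a minimal sketch under stated assumptions, not a real product or API: the `SmartLight` class, the 20:00 to 06:00 "night" window, the default brightness, the 15,000-hour rated bulb life and the 90% warning threshold are all hypothetical, and for simplicity an empty room is treated as the occupant being "away".

```python
from dataclasses import dataclass, field
from typing import List, Optional

# All names and numbers below are hypothetical, chosen only for illustration.
NIGHT_START, NIGHT_END = 20, 6   # assumed "night" window, 24-hour clock
DEFAULT_LEVEL = 100              # brightness (%) used when the system acts on its own
BULB_LIFE_HOURS = 15_000         # assumed rated bulb life
WARN_FRACTION = 0.9              # notify once 90% of rated life is used


def is_night(hour: int) -> bool:
    return hour >= NIGHT_START or hour < NIGHT_END


@dataclass
class SmartLight:
    level: int = 0                   # current brightness, 0 = off
    override: Optional[int] = None   # level chosen explicitly by the user
    hours_used: float = 0.0
    notifications: List[str] = field(default_factory=list)
    _warned: bool = False

    def user_sets_level(self, level: int) -> None:
        """Explicit (top left): the user dims the light with a switch or gesture."""
        self.override = max(0, min(100, level))
        self.level = self.override

    def update(self, occupied: bool, hour: int, elapsed_hours: float = 1.0) -> None:
        """Implicit: react to presence and time without the user's attention."""
        if occupied:
            # Bottom right: someone is present, so switch on,
            # but respect any level the user chose explicitly.
            self.level = self.override if self.override is not None else DEFAULT_LEVEL
        else:
            # Bottom left: nobody home (simplified as an empty room); drop the
            # override and light up at night only, to deter burglars.
            self.override = None
            self.level = DEFAULT_LEVEL if is_night(hour) else 0
        if self.level > 0:
            self.hours_used += elapsed_hours
        self._check_bulb()

    def _check_bulb(self) -> None:
        """Top right: surface a notification only when the bulb nears end of life."""
        if not self._warned and self.hours_used >= WARN_FRACTION * BULB_LIFE_HOURS:
            self.notifications.append("Bulb is close to needing replacement")
            self._warned = True


if __name__ == "__main__":
    light = SmartLight()
    light.update(occupied=True, hour=19)    # person enters: lights on
    light.user_sets_level(40)               # explicit dimming
    light.update(occupied=True, hour=20)    # override respected
    light.update(occupied=False, hour=23)   # away at night: lights on
    light.update(occupied=False, hour=12)   # away in daytime: lights off
    print(light.level, light.notifications)
```

Note the design choice in `update`: the implicit behaviour always defers to the most recent explicit override while someone is present, which is one way to move gracefully between the system acting on its own and the user taking control.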

We also need to consider how to move gracefully between these states of interaction. Seamless interactions may not always be possible due to the unpredictability of networks, but we can design for continuity between IOT touch points.

Emotech

We now have the capability to measure emotions, and this can be far more valuable than hard metrics alone. What is a metric worth if you don’t know how much of it is fuelled by positive versus negative emotions? With that knowledge, you can drive interactions with empathy instead of indifference to attention overload.

Social Interaction and making worlds

We can now also consider the emotional, sociological and behavioural aspects of how people interact with each other and their environments. Architects do this naturally as part of their work, and it now behooves Interaction Designers to think about how environments can adapt or become something new in different contexts. Spaces become places, an appearance becomes a presence.

Rethinking Accessibility and Disability

Perhaps, instead of framing inclusive design problems in terms of limitations or constraints, we can think about how to extend our human capabilities to enhance our lives.

A tool addresses human needs by amplifying human capabilities

Summary

For Interaction and UX Designers who have worked for a long time with screen-based interactions, it is really exciting to think about wider models of interaction, and about devices that are embedding themselves into the physical and social spaces we inhabit every day.

Interacting with screens is not going to go away, and in many contexts it is still the most appropriate model. However, it is worth considering how any application or service might fit into a wider ecosystem, and whether you always need the explicit attention of your users.

References and links

- http://worrydream.com/ABriefRantOnTheFutureOfInteractionDesign/

- UX for the internet of things

- The Design of Implicit Interactions

- http://www.billbuxton.com/BG_FG.html

- http://www.meetup.com/IxDA-London/events/234261722/

- http://thenextweb.com/microsoft/2016/10/18/microsofts-speech-recognition-is-now-just-as-accurate-as-humans/

- http://interactions.acm.org/archive/view/september-october-2015/after-interaction